Scientists at the University of California, Berkeley have demonstrated a striking method to reconstruct words, based on the brain waves of patients thinking of those words.

The method, reported in PLoS Biology, relies on gathering electrical signals directly from patients’ brains.

Using signals recorded while the patients listened to speech, a computer model reconstructed the sounds of words that they were thinking of.

The technique may in future help comatose and locked-in patients communicate.

Several approaches have in recent years suggested that scientists are closing in on methods to tap into our very thoughts.

In a 2011 study, participants with electrodes in direct brain contact were able to move a cursor on a screen by simply thinking of vowel sounds.

A technique called functional magnetic resonance imaging, which tracks blood flow in the brain, has shown promise for identifying which words or ideas someone may be thinking about.

By studying patterns of blood flow related to particular images, Jack Gallant’s group at the University of California, Berkeley showed in September that such patterns can be used to guess the images being thought of, recreating “movies in the mind”.

Now, Brian Pasley of the University of California, Berkeley and a team of colleagues have taken that “stimulus reconstruction” work one step further.

“This is inspired by a lot of Jack’s work,” Dr Pasley said. “One question was… how far can we get in the auditory system by taking a very similar modelling approach?”

The team focused on an area of the brain called the superior temporal gyrus, or STG.

This broad region is not just part of the hearing apparatus but one of the “higher-order” brain regions that help us make linguistic sense of the sounds we hear.

The team monitored the STG brain waves of 15 patients who were undergoing surgery for epilepsy or tumours, while playing them recordings of several different speakers reciting words and sentences.

The trick lies in disentangling the chaotic electrical signals that the audio produced in the patients’ STG regions.

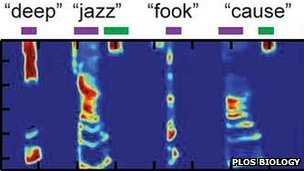

To do that, the team used a computer model that mapped out which parts of the brain fired at what rate when different frequencies of sound were played.
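The article does not describe the model in detail. As a rough illustration only, the sketch below assumes a simple linear (ridge-regression) decoder that maps electrode activity back onto sound power in a set of frequency bands. The data are simulated, the electrode and band counts are arbitrary, the function names are hypothetical, and the time lags a realistic model would include are ignored.

```python
import numpy as np

def fit_linear_decoder(neural, spectrogram, alpha=1.0):
    """Fit a ridge-regression map from electrode activity to spectrogram bins.

    neural:      (n_timepoints, n_electrodes) electrode activity
    spectrogram: (n_timepoints, n_freq_bins) sound power per frequency band
    Returns weights of shape (n_electrodes, n_freq_bins).
    """
    n_electrodes = neural.shape[1]
    # Closed-form ridge solution: W = (X^T X + alpha * I)^-1 X^T Y
    gram = neural.T @ neural + alpha * np.eye(n_electrodes)
    return np.linalg.solve(gram, neural.T @ spectrogram)

def reconstruct(neural, weights):
    """Predict a spectrogram from new electrode activity."""
    return neural @ weights

# Simulated (hypothetical) data: sound drives 64 electrodes via a random mixing,
# plus noise; 32 frequency bands.
rng = np.random.default_rng(0)
n_time, n_elec, n_freq = 2000, 64, 32
spectrogram = rng.normal(size=(n_time, n_freq))
mixing = rng.normal(size=(n_freq, n_elec))
neural = spectrogram @ mixing + rng.normal(size=(n_time, n_elec))

# Fit on the first 1500 timepoints, evaluate on the held-out remainder
train, test = slice(0, 1500), slice(1500, None)
weights = fit_linear_decoder(neural[train], spectrogram[train], alpha=10.0)
predicted = reconstruct(neural[test], weights)

# Correlation between the actual and reconstructed held-out spectrogram
r = np.corrcoef(predicted.ravel(), spectrogram[test].ravel())[0, 1]
print(f"held-out correlation: {r:.2f}")
```

Fitting on one stretch of recording and scoring on another is what makes the correlation meaningful: a decoder judged on its own training data would look better than it really is.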

When patients were then presented with words to think about, the model helped the team guess which word each participant had chosen.
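One simple way to turn a reconstruction into a guess, which may differ from the study’s actual procedure, is to compare the decoded spectrogram with the known spectrogram of each candidate word and pick the closest match. In this hypothetical sketch the candidate words and their spectrograms are random stand-ins, and correlation is assumed as the similarity score.

```python
import numpy as np

def identify_word(reconstruction, candidates):
    """Return the candidate word whose spectrogram best matches the reconstruction.

    reconstruction: (n_frames, n_bands) decoded spectrogram
    candidates:     dict mapping word -> spectrogram of the same shape
    """
    def corr(a, b):
        # Pearson correlation over all time-frequency bins
        return np.corrcoef(a.ravel(), b.ravel())[0, 1]

    scores = {word: corr(reconstruction, spec) for word, spec in candidates.items()}
    return max(scores, key=scores.get), scores

rng = np.random.default_rng(1)
# Hypothetical candidate set: random arrays standing in for real word spectrograms
candidates = {w: rng.random((50, 32)) for w in ["river", "table", "window", "garden"]}
# A noisy "reconstruction" of one of the candidates
reconstruction = candidates["window"] + 0.3 * rng.normal(size=(50, 32))

best, scores = identify_word(reconstruction, candidates)
print(best, {w: round(s, 2) for w, s in scores.items()})
```

Because the choice is made among a fixed set of candidates, even an imperfect reconstruction can pick out the right word, provided it resembles that word more than the alternatives.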

The scientists were even able to reconstruct some of the words, turning the brain waves they saw back into sound on the basis of what the computer model suggested those waves meant.
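The article does not say how the reconstructed signals were turned into audio. A crude possibility, shown here purely as an assumption, is additive synthesis: one sine wave per frequency band, with its loudness following that band’s reconstructed envelope over time. The band centre frequencies, frame rate and sample rate below are hypothetical choices.

```python
import numpy as np

def spectrogram_to_audio(spec, band_freqs, frame_rate=100, sample_rate=16000):
    """Crude additive synthesis from a band-magnitude spectrogram.

    spec:       (n_frames, n_bands) reconstructed band magnitudes
    band_freqs: centre frequency in Hz of each band (below sample_rate / 2)
    Returns a mono waveform scaled to a peak amplitude of 1.
    """
    # Reconstructed magnitudes can come out negative, so clip them at zero
    spec = np.clip(spec, 0, None)
    n_frames, n_bands = spec.shape
    n_samples = int(n_frames * sample_rate / frame_rate)
    t = np.arange(n_samples) / sample_rate
    frame_times = np.arange(n_frames) / frame_rate
    audio = np.zeros(n_samples)
    for k in range(n_bands):
        # Stretch the frame-level envelope up to the audio sample rate
        envelope = np.interp(t, frame_times, spec[:, k])
        audio += envelope * np.sin(2 * np.pi * band_freqs[k] * t)
    peak = np.max(np.abs(audio))
    return audio / peak if peak > 0 else audio

rng = np.random.default_rng(2)
spec = rng.random((50, 32))                 # 0.5 s of hypothetical reconstruction
band_freqs = np.geomspace(100, 7000, 32)    # log-spaced band centres (assumed)
audio = spectrogram_to_audio(spec, band_freqs)
print(audio.shape)
```

Discarding phase and replacing each band with a single tone loses much of the original sound’s detail, so output from a method like this would be only roughly intelligible.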

“There’s a two-pronged nature of this work – one is the basic science of how the brain does things,” said Robert Knight of UC Berkeley, senior author of the study.

“From a prosthetic view, people who have speech disorders… could possibly have a prosthetic device when they can’t speak but they can imagine what they want to say,” Prof Knight explained.

“The patients are giving us this data, so it’d be nice if we gave something back to them eventually.”

The authors caution that the thought-translation technique needs vast improvement before such prosthetics become a reality.

But the benefits of such devices could be transformative, said Mindy McCumber, a speech therapist at Florida Hospital in Orlando.

“As a therapist, I can see potential implications for the restoration of communication for a wide range of disorders,” she said.

“The development of direct neuro-control over virtual or physical devices would revolutionise ‘augmentative and alternative communication’, and improve quality of life immensely for those who suffer from impaired communication skills or means.”